I built a web scraper to get leads off a directory, here's how I built this

Hi,

Yesterday I found a job prompt on Upwork that required scraping of black tax professional leads from a website. I felt I could handle it.

So I wanted to apply for the job but first, I had to prove to myself that I could build a web scraper that can compile those leads

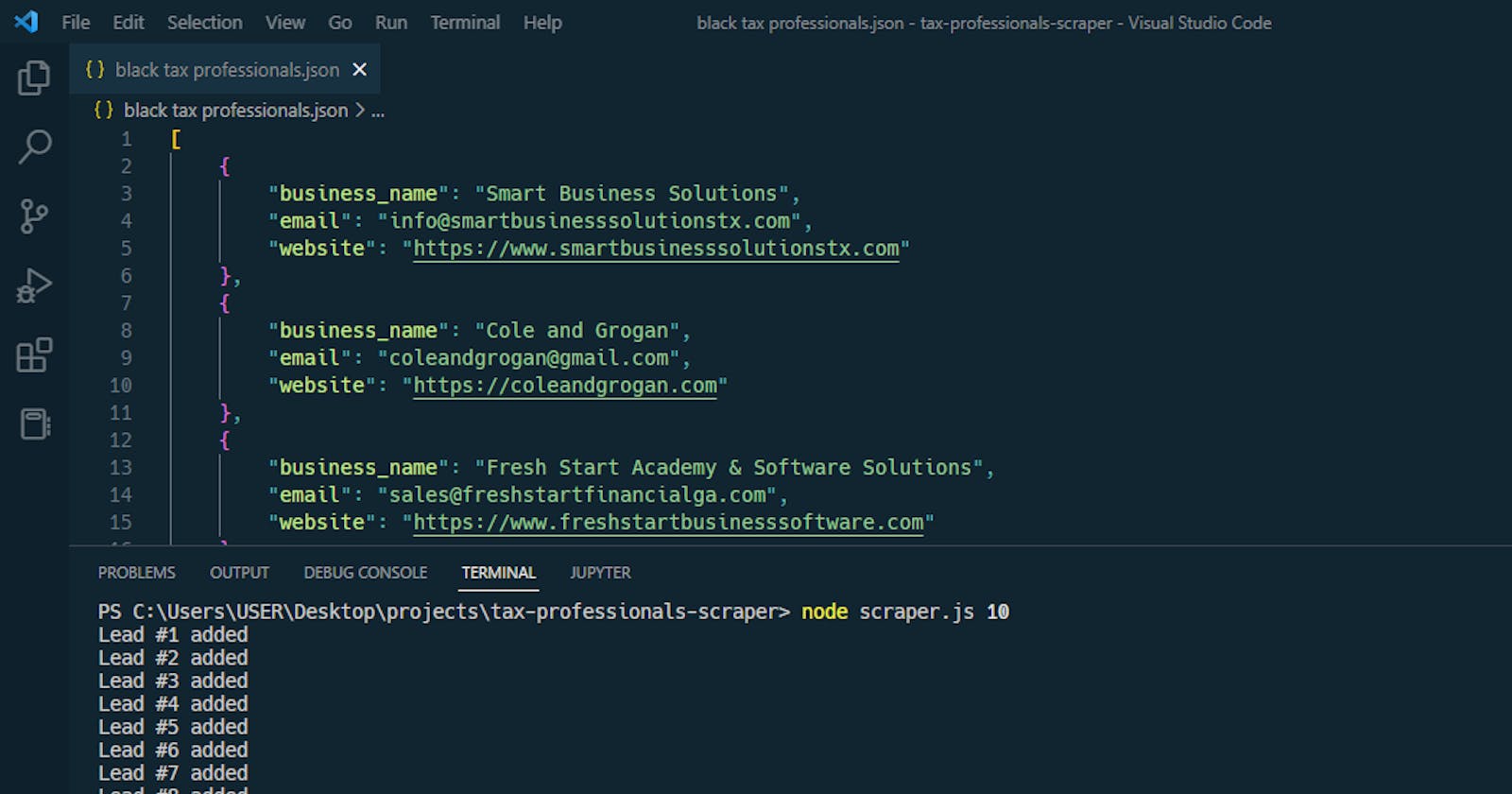

This is the prototype I built

Overview

I built a script that scraped the contacts of black tax professionals from a website. The task was to compile them into a google sheet, but I had them written to a JSON file instead which I manually converted online and attached to my proposal.

The challenge

The script (or bot) should visit the target website

It should scrape the business name, email and website from each lead on the directory by visiting the page for that lead

It should export that data to a google sheet

My solution

The script (or bot) visits the page of a lead on the target website

It scrapes the business name, email and website from that page and adds it to an array

It then gets a link (A "Next" button) pointing to the next lead on the directory

It repeats the steps 1 - 3 for the amount of leads needed to be scraped

Lastly the script saves the leads as a json file which i took and manually uploaded to google sheets

Links

- Solution URL: View on Github

My process

I started the script basing it off a former scraper i built for myself, so i had a good setup for this kind of data harvesting

Initially i assumed the website was rendered dynamically with javascript so i pulled up puppeteerJS and started automating my browser to visit the website.

To scrape the target website successfully, i had to structure my scraper to how the website was structured, the website is a simple directory that showcases black tax professionals on a grid with pagination at the bottom, there were about 53 pages of information to be scraped.

Also each listing on the website lead to a page about the tax professional showing their name and contact details and had a link at the bottom of the page linking to the next tax professional on the directory. Neat

I had done a pagination scraper before, but i knew i didn't want to do the whole job for the client off the bat (I was still trying to get hired after all 😅).

So I built something lightweight that simply visited a black professional's page (Using puppeteer), scraped the information i needed (using cheerio) and visited the next black professional within a loop which runs for the number of leads i wanted (I submitted 20 leads as a sample in my proposal).

But it felt slow, i was scrpaing leads at a slow rate, and jobs like these expected speed, so i went back to the website and found out it was a server-rendered application. So i improved the speed by skipping puppeteer and loading the website as html using isomorphic-fetch and scraped the data i needed.

At the end the script was scraping leads at 1 lead per second (Sometimes longer, sometimes shorter due to varying internet speed)

After the scraping is done, the data is converted to json and saved as a file named black tax professionals.json which i attached to my proposal when submitting it

And that's how i built this project

Built with

JavaScript [NodeJS]

CheerioJS (DOM Parser)

PuppeteerJS (Browser automation tool)

What I learned

I learnt a ton of new things:

Learnt how to use command-line arguments to control a program

Learnt how to scrape static sites using cheerioJS and isomorphic-fetch packages

Learnt how to use puppeteer to navigate a page

Learnt how to parse a webpage using cheerio

Continued development

Some things i would love to improve in my next scraper includes:

Building an interface for interacting with the script, right now it's a cli application which is not very end-user friendly. One idea i have is creating a telegram bot the user can interact with

Converting the data into a csv file and pushing it to a google sheet via their api

Making the bot faster. In my

scraper-pptrwhere i used puppeteer, the script scrapes at 1 lead per 2 seconds which i feel is slow because it visits the lead pages sequentially. I believe if i scrape the links to all the leads first before visiting them i can scrape data much faster by opening multiple pages at the same time.

Let's connect...

Github - Jeffrey Onuigbo

Frontend Mentor - @jeffreyon

Twitter - @jeffreyon_